Machine Learning

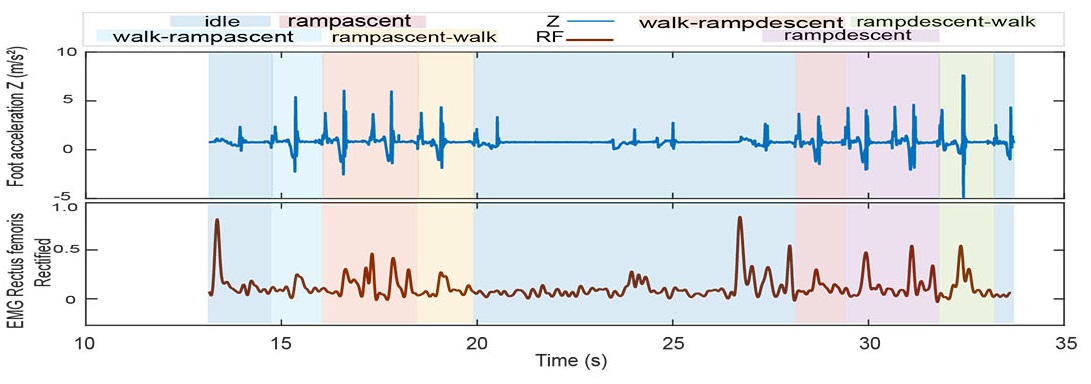

In wearable robotics, augmentation systems should recognize the user's activity without manual input, which imposes additional effort on the user. Using machine learning methods, I create models that predict the user's intent and other state parameters that describe locomotion. With this information, the controllers can change behaviors and adjust operating parameters to accommodate the user's task naturally. The process consists of taking windowed sample data from wearable sensors and recognizing the patterns within it. As the gait changes, the signal patterns change as well, for example in the acceleration at the foot or in the activation of the rectus femoris muscle.
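
The windowing step can be illustrated with a minimal sketch. This is not the code from my publications: the function names, the window length, the step, and the four summary statistics are assumptions chosen only for illustration, and the signal is random data standing in for sensor channels.

```python
import numpy as np

def sliding_windows(signal, window_size, step):
    """Split a (n_samples, n_channels) array into overlapping windows.

    Returns an array of shape (n_windows, window_size, n_channels).
    """
    signal = np.asarray(signal, dtype=float)
    n_samples, n_channels = signal.shape
    if n_samples < window_size:
        return np.empty((0, window_size, n_channels))
    starts = np.arange(0, n_samples - window_size + 1, step)
    return np.stack([signal[s:s + window_size] for s in starts])

def window_features(windows):
    """Summarize each window with per-channel mean, std, min, and max.

    Returns an array of shape (n_windows, 4 * n_channels).
    """
    stats = [
        windows.mean(axis=1),
        windows.std(axis=1),
        windows.min(axis=1),
        windows.max(axis=1),
    ]
    return np.concatenate(stats, axis=1)

# Placeholder data: 1000 samples of 3 hypothetical sensor channels.
rng = np.random.default_rng(0)
fake_signal = rng.normal(size=(1000, 3))

windows = sliding_windows(fake_signal, window_size=200, step=50)
features = window_features(windows)
print(windows.shape, features.shape)
```

Each row of `features` would then be one input example for a classifier or regressor. The choice of window size matters in practice, since it trades responsiveness against the amount of context available per prediction.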

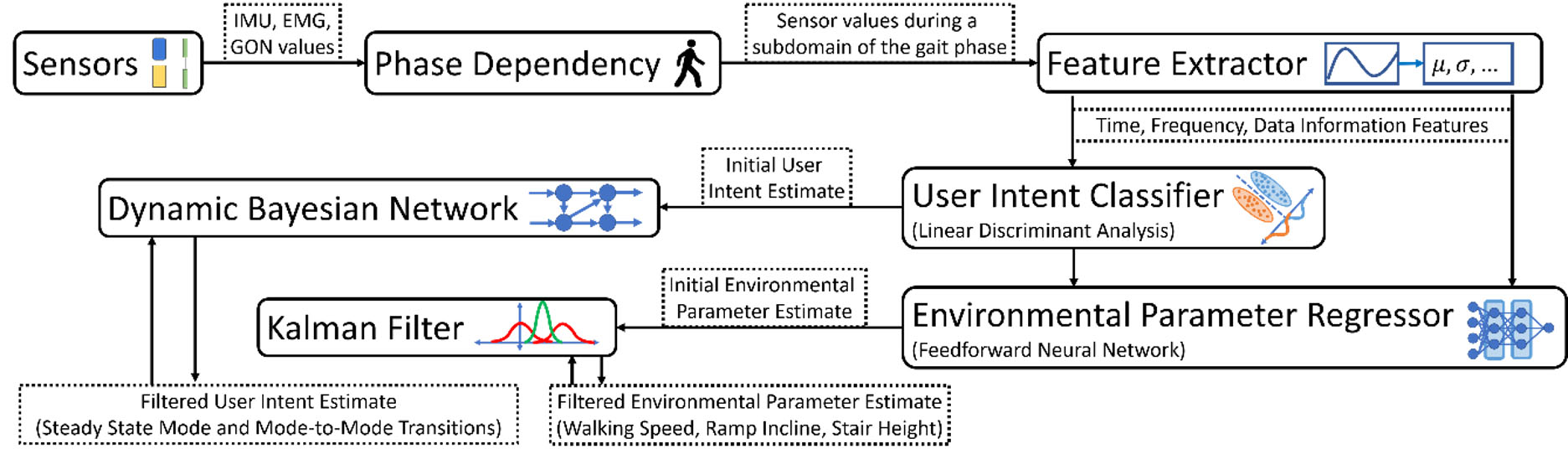

In Transactions on Biomedical Engineering, I presented a workflow that combines the classification of ambulation modes with the regression of walking speed, ramp incline, and stair height. The paper thoroughly analyzes how relevant the different data channels are for accurate predictions in healthy individuals. It also studies the dependency on gait phase, window size, and network architecture, providing the foundation for machine learning projects with wearable robotics in the EPIC lab.

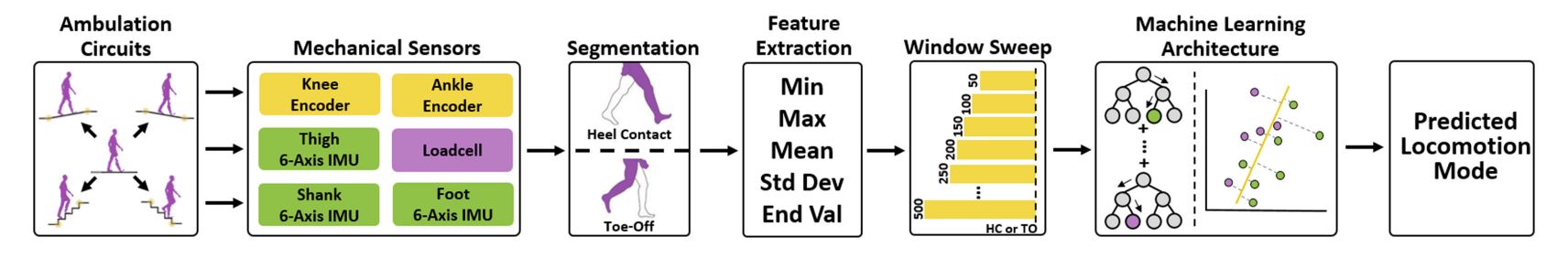

This machine learning strategy can also be applied to prostheses. In that case, limiting the sensors to those embedded in the device simplifies the setup and improves comfort. In our article in Robotics and Automation Letters, we presented the classification part of the framework in both user-dependent and user-independent versions, demonstrating that the models can make predictions across different configurations of stairs and ramps.
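
The difference between user-dependent and user-independent models comes down to how the data is split for training and testing. The sketch below shows one common way to set this up, assuming each example carries a subject label. The helper names, the subject labels, and the 80/20 within-subject split are hypothetical and are not taken from the article.

```python
import numpy as np

def leave_one_subject_out(subject_ids):
    """User-independent splits: test on one subject, train on all others.

    Yields (held_out_subject, train_indices, test_indices).
    """
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test_mask = subject_ids == subject
        yield subject, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

def within_subject_split(subject_ids, subject, train_fraction=0.8):
    """User-dependent split: train and test on the same subject's data.

    Uses the first part of the subject's examples for training
    (an assumption made here for simplicity).
    """
    idx = np.flatnonzero(np.asarray(subject_ids) == subject)
    cut = int(len(idx) * train_fraction)
    return idx[:cut], idx[cut:]

# Placeholder labels: three hypothetical subjects with five examples each.
subject_ids = ["S1"] * 5 + ["S2"] * 5 + ["S3"] * 5

folds = list(leave_one_subject_out(subject_ids))
dep_train, dep_test = within_subject_split(subject_ids, "S2")
```

In the user-independent case, no data from the test subject is seen during training, which is the situation a new user would face when putting on the device for the first time.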

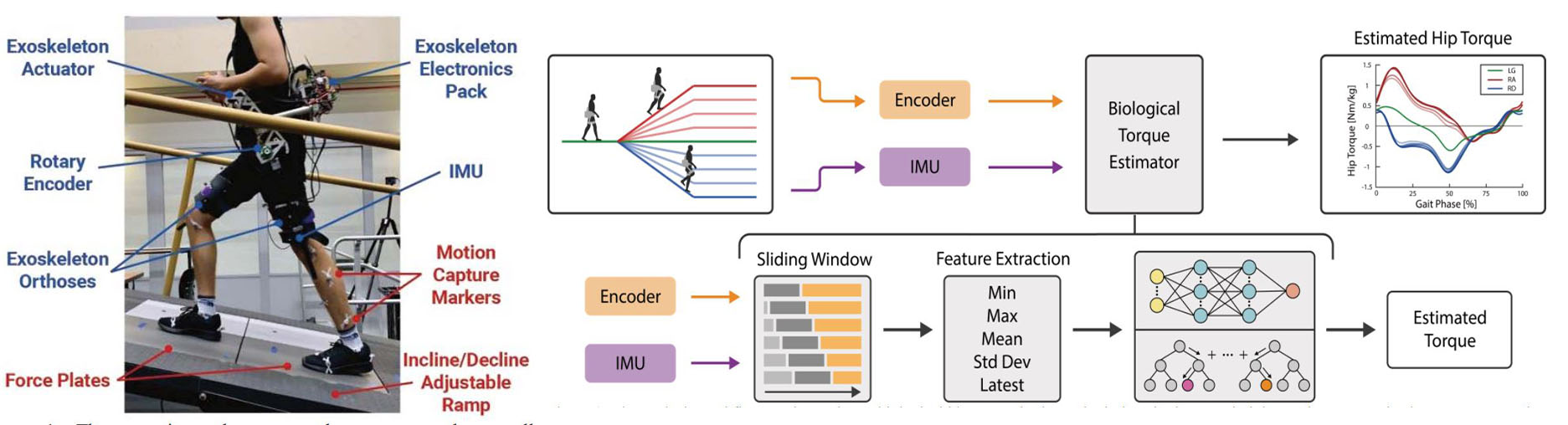

With information about the state and ambulation mode, a controller can decide when to switch between configurations and adjust the parameters used to compute the final torque that the motors should provide. Furthermore, I have explored using similar machine learning techniques to predict the user's intended joint moment. As opposed to our previous walking state predictions, this method provides a torque setpoint signal that feeds directly to the actuators. This strategy could be beneficial in exoskeleton technology, where fast and accurate knowledge of the user's desired torque is critical to achieving assistance in dynamic tasks. As a preliminary evaluation, we presented biological hip torque estimation with an exoskeleton at the International Conference on Biomedical Robotics and Biomechatronics (BioRob).
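
To make the mode-switching idea concrete, here is a small sketch of a controller that looks up its parameters from the predicted ambulation mode. The impedance-style control law, the mode names, and every numeric value are placeholders assumed for illustration. They do not describe the controllers or parameters used in my work.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ImpedanceParams:
    stiffness: float    # N*m/rad (placeholder value)
    damping: float      # N*m*s/rad (placeholder value)
    equilibrium: float  # rad (placeholder value)

# Hypothetical parameter sets, one per predicted ambulation mode.
MODE_PARAMS = {
    "level_walk": ImpedanceParams(2.0, 0.10, 0.00),
    "ramp_ascent": ImpedanceParams(3.0, 0.15, 0.10),
    "stair_ascent": ImpedanceParams(4.0, 0.20, 0.20),
}

def torque_command(mode, angle, velocity,
                   params_by_mode=MODE_PARAMS, default_mode="level_walk"):
    """Compute a joint torque from the parameters of the predicted mode.

    Falls back to the default mode if the prediction is not recognized.
    """
    p = params_by_mode.get(mode, params_by_mode[default_mode])
    return p.stiffness * (p.equilibrium - angle) - p.damping * velocity

tau = torque_command("stair_ascent", angle=0.1, velocity=0.5)
fallback_tau = torque_command("unknown_mode", angle=0.0, velocity=0.0)
```

The direct torque prediction approach described above would replace this lookup entirely: the model output itself would serve as the setpoint sent to the actuators.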

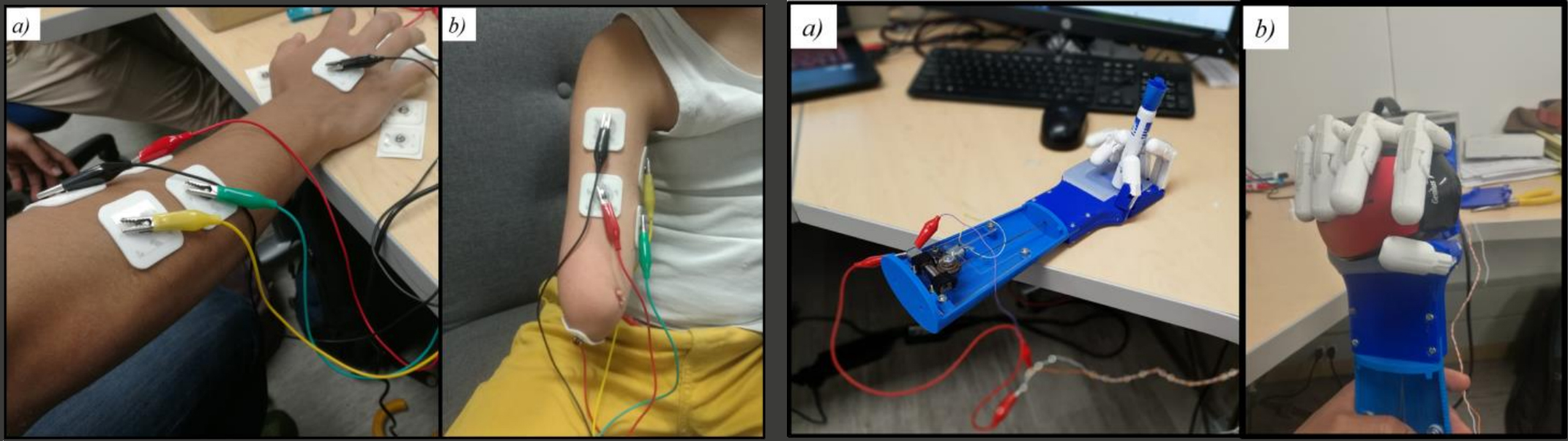

The feature analysis and model development from my Ph.D. can be applied to other robotic systems. I have explored machine learning applications in the upper limb, where the high number of degrees of freedom makes the problem challenging. In my article in Transactions on Neural Systems and Rehabilitation Engineering, I evaluated EMG feature sets and nonlinear models to classify more than 30 different gestures in simultaneous motions of the elbow, wrist, and hand. I also extended my previous work on the underactuated hand project at Uniandes, helping Mario Benitez with his M.Eng. thesis to include EMG-based real-time pattern recognition in a hand prosthesis. This work was presented at the ASME International Mechanical Engineering Congress and Exposition (IMECE).